With the aim of democratizing AI technology, Korean startup NaraTek is set to launch ultra-cheap, high-performance neural processing units (NPUs) that could revolutionize the accessibility of AI hardware. How does NaraTek plan to ensure the Nara N1’s performance matches its affordability, what impact might the widespread availability of affordable NPUs have on AI innovation and application development, and how could initiatives like NaraTek’s reshape the landscape of AI education and experimentation for a broader audience?

Top Stories This Week

- Intel Sees ‘Huge’ AI Opportunities for Xeon—With and Without Nvidia – CRN

- Government Technology Reveals Who Is Restarting Three Mile Island to Power Data Centers

- Google Files Antitrust Complaint Against Microsoft Over Cloud Practices – National Technology

- China’s First High-Throughput Ethernet Protocol Standard Is Released

- DARPA Has A “Weird” Plan To Aid People In Authoritarian Regimes Using Hidden Networks

- RTOS Vs Linux: The IoT Battle Extends from Software to Hardware | Betanews

- The Raspberry Pi Of AI: Korean Startup Wants To Bring Ultra-Cheap, High Performance NPUs …

- Revolutionizing Quantum Devices with Oak Ridge’s Groundbreaking Fabrication Technology

- Japan R&D Brings Powerful Diamond Semiconductors Closer to Reality – KrASIA

- Flexible RISC-V Processor: Could Cost Less Than a Dollar – IEEE Spectrum

Hardware Business News

Google Files Antitrust Complaint Against Microsoft Over Cloud Practices – National Technology

Google has filed an antitrust complaint with the European Commission against Microsoft, accusing it of unfair licensing tactics that hinder competition in cloud computing. Google alleges that Microsoft leverages its dominance in operating systems and productivity software to bind customers to its Azure cloud platform, making it difficult and costly for users to switch to alternative services. The dispute raises several questions: How might Google’s antitrust complaint affect cloud computing competition in Europe and beyond? What implications could regulatory intervention have for the practices of major players in the industry? How will this dispute influence the choices available to businesses and public sector organizations seeking cloud services?

Government Technology Reveals Who Is Restarting Three Mile Island to Power Data Centers

Microsoft’s decision to revive a reactor at the Three Mile Island Nuclear Generating Station, in collaboration with Constellation Energy, marks a notable shift toward sustainable energy practices in the tech industry and a pivotal moment in Microsoft’s journey toward carbon negativity. Reactivating the dormant Unit 1 reactor is not without challenges, however: it requires regulatory approvals and extensive refurbishment. As Microsoft pursues this approach to powering its data centers, questions remain about its environmental impact. How will the revival of the Three Mile Island reactor contribute to Microsoft’s carbon-negative goals? What technological advancements will be implemented during the refurbishment to ensure safety and efficiency? And how might this partnership inspire other tech giants to explore similar energy solutions for their operations?

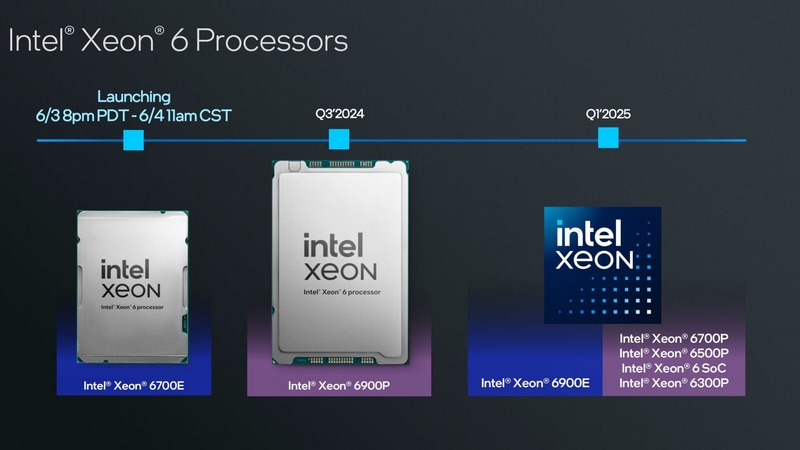

Intel Sees ‘Huge’ AI Opportunities for Xeon—With and Without Nvidia – CRN

Intel’s focus on AI solutions using its Xeon processors presents a promising outlook for the tech industry. With advancements aimed at enhancing AI capabilities independently and in collaboration with Nvidia, Intel is strategically positioning itself to cater to diverse AI needs. As the company gears up to navigate the evolving AI landscape, several questions arise: How do Intel’s specialized AI accelerators integrated into Xeon processors improve performance compared to traditional processors, what specific sectors stand to benefit the most from Intel’s AI advancements, and in what ways does Intel plan to ensure seamless integration of its AI solutions with Nvidia’s technology to offer customers a comprehensive AI ecosystem?

Hardware Engineering News

RTOS Vs Linux: The IoT Battle Extends from Software to Hardware

In the ever-evolving landscape of technology, the debate between Real-Time Operating Systems (RTOS) and Linux for Internet of Things (IoT) applications has been a topic of interest. As IoT devices become more prevalent in our daily lives, the choice between these two software platforms can significantly impact the performance and functionality of connected devices. How do the real-time capabilities of RTOS compare to the flexibility of Linux in IoT applications, what are the key considerations for developers when choosing between RTOS and Linux for their IoT projects, and how does this software battle extend its influence to the hardware components of IoT devices?

DARPA Has A “Weird” Plan To Aid People In Authoritarian Regimes Using Hidden Networks

Could DARPA’s covert network project revolutionize communication for individuals in authoritarian regimes by providing secure channels for information exchange? How do decentralized networks differ from traditional internet infrastructure in ensuring communication privacy? And what are the potential ethical implications of implementing such hidden communication technologies in oppressive societies?

China’s First High-Throughput Ethernet Protocol Standard Is Released

China has recently unveiled its first high-throughput Ethernet protocol standard, a significant step toward establishing a robust framework for artificial intelligence networks. Proponents compare its potential effect on network communications to the way the Android system transformed the smartphone industry. How will this new Ethernet protocol standard impact the speed and scalability of AI networks? What key features differentiate it from existing protocols? And how might its adoption influence the global AI technology sector?

Hardware R&D News

Japan R&D Brings Powerful Diamond Semiconductors Closer to Reality – KrASIA

Diamond power semiconductors, with their exceptional capabilities, are on the brink of revolutionizing various industries, from electric vehicles to nuclear power. Japan’s significant advancements in diamond semiconductor technology are paving the way for their commercialization, promising a new era of high-performance electronics. As these innovations unfold, one might wonder about the specific challenges that researchers have overcome to bring diamond semiconductors closer to reality, how the performance of diamond semiconductors compares to existing materials like silicon carbide and gallium nitride, and what impact the widespread adoption of diamond semiconductors could have on the future of technology and industry.

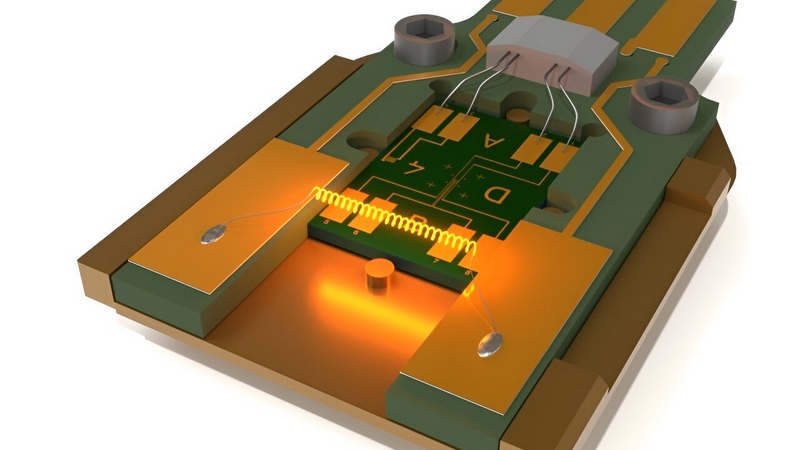

Revolutionizing Quantum Devices with Oak Ridge’s Groundbreaking Fabrication Technology

Researchers at Oak Ridge National Laboratory have developed a groundbreaking technology that allows for the precise placement of individual atoms, potentially revolutionizing the fabrication of materials for quantum devices. Using an advanced microscopy tool called the synthescope, scientists can now manipulate materials at the atomic level, offering unprecedented control over their properties. This new approach has significant implications for quantum computing, communication, and sensing, as it enables the creation of materials that harness quantum phenomena like entanglement. As this method evolves, it opens the door to new possibilities in material science and microelectronics. How will atomic-scale fabrication impact the development of next-generation quantum devices? What challenges remain in scaling this technology for broader applications? And how could this breakthrough influence industries beyond quantum computing, such as energy or telecommunications?

Open-Source Hardware News

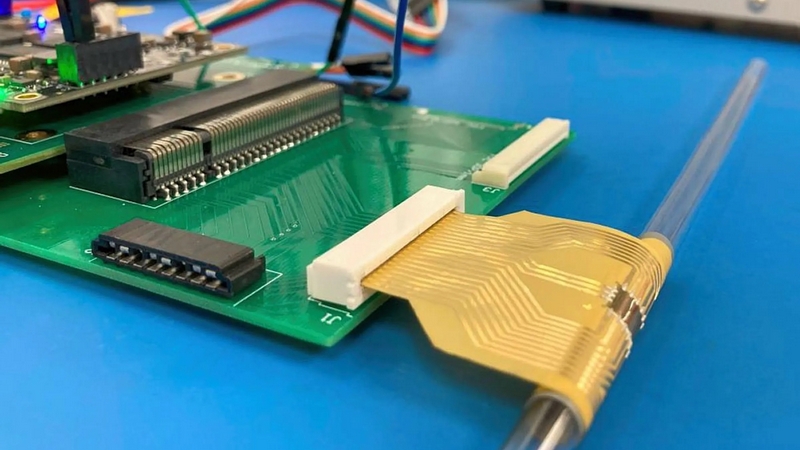

Flexible RISC-V Processor: Could Cost Less Than a Dollar – IEEE Spectrum

Scientists have unveiled the Flex-RV, a flexible and programmable microchip built on indium gallium zinc oxide (IGZO), a metal-oxide semiconductor, rather than traditional silicon. This innovation opens up possibilities for applications such as wearable healthcare electronics and soft robotics, and the microchip’s open-source RISC-V architecture hints at a cost-effective solution for various industries. How does the flexibility of the Flex-RV microchip enable its use in on-skin computers and brain-machine interfaces? What advantages does the RISC-V architecture offer over proprietary architectures like x86 and Arm? And how might the integration of machine learning capabilities impact the future of flexible electronics?